Neural nets trained to do object recognition extract non-local shape information

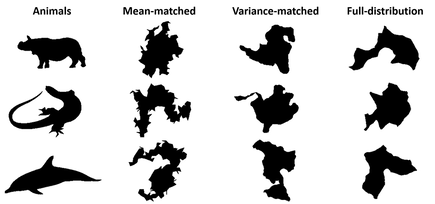

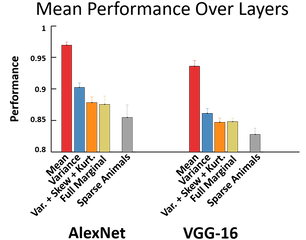

It is uncertain how explicitly deep convolutional neural networks (DCNNs) represent shape. To probe this, we used animal silhouettes as well as matched controls generated by two distinct generative models of shape. The first model generates silhouettes that are matched to the animals in their local curvature statistics, while the second generates sparse shape components that contain many of the global symmetries seen in animal shapes. We found that two DCNNs pretrained for object recognition represent non-local shape information, that this information becomes more explicit deeper in the network, and that it goes beyond simple global geometric regularities.
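To make "local curvature statistics" concrete, here is a toy sketch. It is not the generative model used in this work. It summarizes a closed polygonal contour by the histogram of its signed turning angles, a discrete stand-in for curvature. Because the histogram discards where along the contour each turn occurs, two globally different shapes can share it. The outlines `T_SHAPE` and `S_SHAPE` (T- and S-tetrominoes) and the helper names are hypothetical, chosen only for illustration.

```python
import numpy as np
from collections import Counter

def turning_angles(vertices):
    """Signed turning angle (degrees) at each vertex of a closed polygon.

    Positive values are left (counterclockwise) turns, negative values are
    right turns. The first vertex is not repeated at the end of the list.
    """
    p = np.asarray(vertices, dtype=float)
    v_in = p - np.roll(p, 1, axis=0)    # edge arriving at vertex i
    v_out = np.roll(p, -1, axis=0) - p  # edge leaving vertex i
    cross = v_in[:, 0] * v_out[:, 1] - v_in[:, 1] * v_out[:, 0]
    dot = np.sum(v_in * v_out, axis=1)
    return np.degrees(np.arctan2(cross, dot))

def curvature_histogram(vertices):
    """A purely local statistic: counts of rounded turning angles, order discarded."""
    return Counter(int(round(a)) for a in turning_angles(vertices))

# Hypothetical outlines traced counterclockwise (toy stand-ins for silhouettes).
T_SHAPE = [(1, 0), (2, 0), (2, 1), (3, 1), (3, 2), (0, 2), (0, 1), (1, 1)]
S_SHAPE = [(0, 0), (2, 0), (2, 1), (3, 1), (3, 2), (1, 2), (1, 1), (0, 1)]

print(curvature_histogram(T_SHAPE))
print(curvature_histogram(S_SHAPE))
```

Both outlines have six +90° turns and two -90° turns, so a local curvature histogram alone cannot tell them apart. Distinguishing such shapes requires non-local information about how the turns are arranged.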

Do neural nets also learn perceptual grouping when learning object recognition?

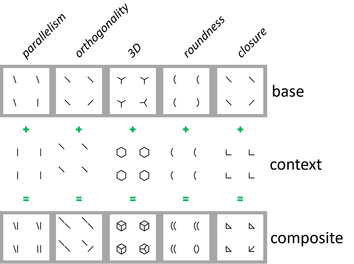

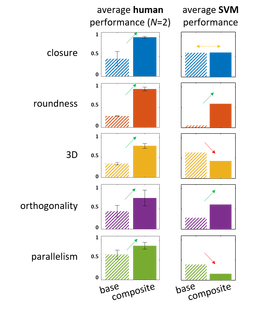

Finding a left-tilted line among right-tilted lines becomes easier when an “L” is added to each item, transforming the task into one of finding a triangle among arrows. This configural superiority effect occurs for a wide array of stimuli and is thought to result from vision using “emergent” features (EFs), such as closure, in the composite case. A more computational interpretation can be couched in the idea of a visual processing hierarchy, in which higher-level representations support complex tasks at the expense of other, possibly less ecologically relevant, tasks. Given the representation at some level of this hierarchy, detecting the oddball might be inherently easier in the composite condition. We found that a convolutional neural net seems to compute two of the five EFs we tested. This suggests that the highest layers of the network represent some EFs better than their base features, but, because the remaining three EFs were not reproduced, it is not the complete story.
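The claim that the oddball is easier to detect "given the representation at some level of the hierarchy" can be made concrete. One approach is to measure how far apart the oddball and a distractor lie in a chosen feature space. The toy sketch below uses raw pixels as that space, and the image size, stroke positions, and the `discriminability` helper are all assumptions for illustration. It shows why a pixel-level representation cannot by itself produce the configural advantage. In this toy the context strokes do not overlap the diagonals, so adding the same "L" to both items leaves their pixel difference, and hence their distance, unchanged.

```python
import numpy as np

N = 16  # image size in pixels (arbitrary choice for this toy)

def diagonal(left_tilted):
    """An 8-pixel diagonal stroke in the middle of an N x N image."""
    img = np.zeros((N, N))
    for row in range(4, 12):
        col = row if left_tilted else N - 1 - row  # "\" vs "/"
        img[row, col] = 1.0
    return img

def l_context():
    """An "L" drawn near the left and bottom edges, away from the stroke."""
    img = np.zeros((N, N))
    img[1:15, 1] = 1.0   # vertical bar
    img[14, 1:15] = 1.0  # horizontal bar
    return img

def compose(item, context):
    """Overlay two line drawings (strokes simply drawn on top of each other)."""
    return np.maximum(item, context)

def pixels(img):
    """The trivial 'layer 0' representation: raw pixel values."""
    return img.ravel()

def discriminability(features, odd, base):
    """Euclidean distance between oddball and distractor in a feature space."""
    return float(np.linalg.norm(features(odd) - features(base)))

odd, base, ctx = diagonal(True), diagonal(False), l_context()
d_base = discriminability(pixels, odd, base)
d_composite = discriminability(pixels, compose(odd, ctx), compose(base, ctx))
print(d_base, d_composite)  # 4.0 4.0: the shared "L" adds nothing in pixel space
```

To make the comparison of interest, one would replace `pixels` with a function that returns a network layer's activations (hypothetical here). Whether the composite distance then exceeds the base distance is the empirical question. Any such advantage must come from nonlinear stages of the representation.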

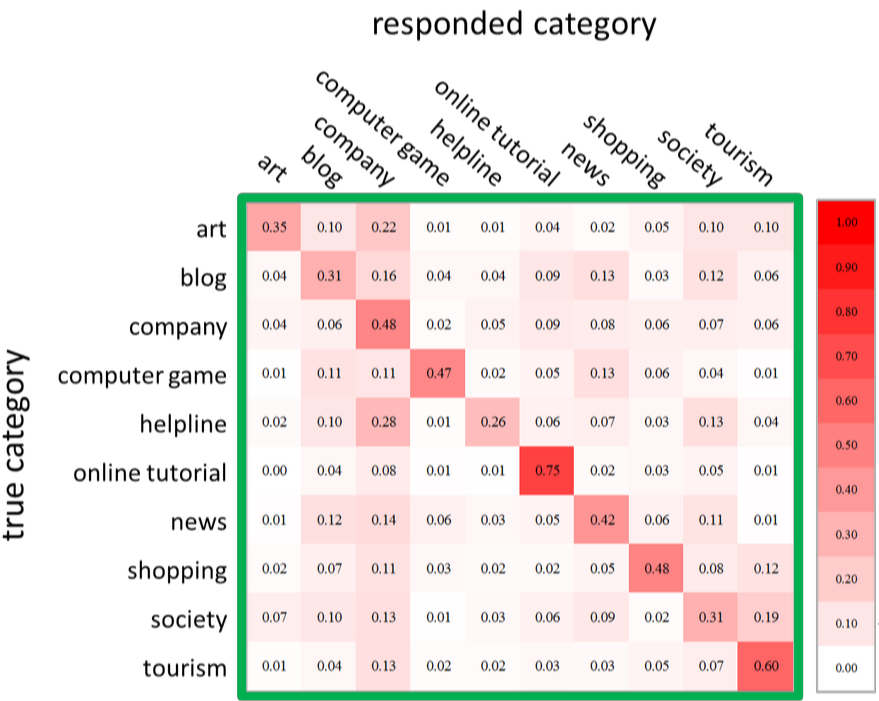

Implications of Peripheral Vision for Design

The limitations of peripheral vision constrain what an observer can do with a visual scene, and much work in the vision literature has used artificial, psychophysics-style stimuli to better understand these limitations. Researchers in human vision, however, have rarely extended this work to more intricate and practical artificial stimuli, such as designs and user interfaces. The goal of this research is to bridge this gap by studying how web pages are perceived in a brief glance, when most of the page is viewed peripherally. We find that observers are adept at categorizing web pages by their purpose, layout, and content, despite having only a single glance at each page. Interestingly, readable text plays a small but significant role in this ability.

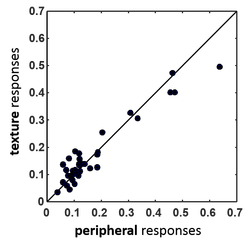

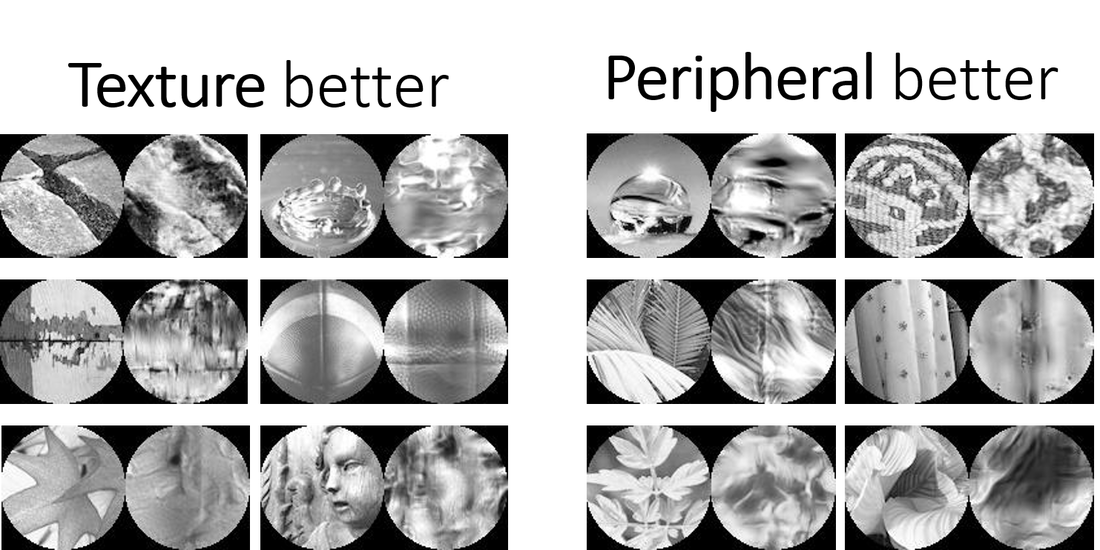

Peripheral Material Perception

Humans can rapidly detect and identify materials, such as a hardwood floor or a smooth leather jacket, in a visual scene. Prior research shows that visual texture plays an important role in how humans identify materials. Interestingly, recent models of peripheral vision suggest a texture-like encoding. This implies that textures might be well represented in peripheral vision, which raises two natural questions: how well are materials perceived in the periphery, and can such a peripheral vision model predict this performance? We found a small but significant correlation between performance in the peripheral and synthesized conditions. This provides evidence that the model can predict peripheral vision, including material perception.
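A "small but significant" correlation is easier to interpret when the test is in view. The sketch below shows one standard choice, a permutation test on Pearson's r computed across items. The original text does not say which test was used. The scores are simulated placeholders with an assumed weak shared component, not results from this study, and the helper name `permutation_test_r` is hypothetical.

```python
import numpy as np

def permutation_test_r(x, y, n_perm=5000, seed=0):
    """Two-sided permutation test for Pearson's r between paired scores."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    r_obs = np.corrcoef(x, y)[0, 1]
    # Shuffling y breaks the pairing, giving the distribution of r under the null.
    null = np.array([np.corrcoef(x, rng.permutation(y))[0, 1]
                     for _ in range(n_perm)])
    # Count the observed statistic as one of the permutations (add-one rule).
    p = (np.sum(np.abs(null) >= abs(r_obs)) + 1) / (n_perm + 1)
    return float(r_obs), float(p)

# Simulated placeholder scores (NOT data from this study): one accuracy-like
# score per image in each condition, sharing a weak common component.
rng = np.random.default_rng(42)
n_images = 500
shared = rng.normal(size=n_images)
peripheral = 0.5 * shared + rng.normal(size=n_images)
synthesized = 0.5 * shared + rng.normal(size=n_images)
r, p = permutation_test_r(peripheral, synthesized)
print(f"r = {r:.2f}, p = {p:.4f}")
```

With several hundred items, a correlation of about 0.2 is typically well beyond chance even though it explains only a few percent of the variance. This is one way a correlation can be small but still significant.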

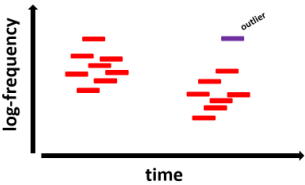

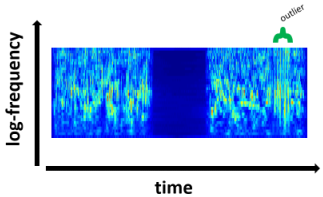

Segregation and integration in sound textures

I'm interested in how natural sounds are represented by the auditory system. Sound information is often ambiguous and must be integrated over time and frequency to yield a reliable interpretation. However, if the information originates from multiple sources, it must be separated rather than integrated for each source to be estimated accurately. Sound textures present one example of this tension: textures such as a rainstorm or a swarm of insects consist of multiple events whose collective properties can be summarized with statistics. Yet textures are also typically the background to other sound sources, as when a bird is audible over a babbling brook. My work aims to understand how and when the auditory system integrates and segregates sounds, for example averaging across the sound energy belonging to the brook while segregating the energy of the bird call.
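The tension between integration and segregation can be illustrated with a deliberately minimal sketch. Here a texture is reduced to white noise and summarized by a single statistic, mean power, whereas texture models typically use a much richer set of statistics. The signals, sample rate, durations, and helper names are all assumptions for illustration. Longer integration gives a more reliable estimate of the statistic. However, integrating a foreground event (a brief tone standing in for the bird) biases the texture statistic.

```python
import numpy as np

FS = 16_000  # sample rate in Hz (arbitrary choice)

def noise_texture(duration_s, rng):
    """Stand-in for a noise-like texture such as rain: white Gaussian noise."""
    return rng.normal(0.0, 1.0, int(duration_s * FS))

def mean_power(x):
    """A single, very simple summary statistic: average signal power."""
    return float(np.mean(x ** 2))

rng = np.random.default_rng(1)

# Integration: estimates from longer excerpts vary less from excerpt to excerpt.
short_estimates = [mean_power(noise_texture(0.01, rng)) for _ in range(200)]
long_estimates = [mean_power(noise_texture(0.5, rng)) for _ in range(200)]
print(np.std(short_estimates), np.std(long_estimates))

# Segregation: a brief tone ("bird call") inside the texture inflates the
# texture's statistic if its energy is integrated along with the background.
background = noise_texture(0.5, rng)
t = np.arange(background.size) / FS
bird = np.where((t >= 0.2) & (t < 0.3), 3.0 * np.sin(2 * np.pi * 3000 * t), 0.0)
print(mean_power(background), mean_power(background + bird))
```

In this toy, the 100 ms tone roughly doubles the estimated power of the half-second excerpt. For the texture statistic to stay accurate, the tone's energy would have to be excluded from the average.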

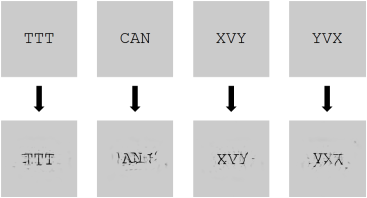

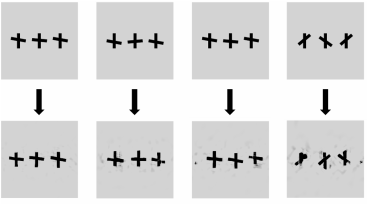

Crowding and peripheral vision

Visual crowding is a well-documented phenomenon in which objects viewed peripherally tend to get jumbled together and confused. Using a single "visual texture" model of peripheral vision, we are able to reproduce seemingly disparate crowding results from different studies. Treating peripheral vision as the perception of image statistics has strong implications both for how we understand the human visual system and for how we design practical interfaces.
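The following is a drastically simplified sketch of the pooling idea. It assumes that each peripheral location summarizes everything within a pooling region whose size grows with eccentricity. That growth is approximated here by Bouma's rule of thumb: flankers closer than roughly half the target's eccentricity crowd it. The full texture model pools a rich set of image statistics, whereas here only mean orientation survives pooling. The constant `BOUMA` and both helper functions are illustrative assumptions.

```python
import numpy as np

BOUMA = 0.5  # assumed critical spacing as a fraction of eccentricity

def is_crowded(target_ecc_deg, flanker_spacing_deg, bouma=BOUMA):
    """Bouma's rule of thumb: flankers closer than ~b * eccentricity crowd."""
    return flanker_spacing_deg < bouma * target_ecc_deg

def pooled_orientation(target_deg, flanker_degs, crowded):
    """Toy pooling: within one pooling region only the mean orientation survives.

    Orientations are axial (period 180 deg), so they are averaged on the
    doubled-angle circle and the result is halved again.
    """
    if not crowded:
        return target_deg
    angles = np.radians(2 * np.array([target_deg, *flanker_degs], dtype=float))
    mean = np.angle(np.mean(np.exp(1j * angles)))
    return float(np.degrees(mean) / 2 % 180)

print(is_crowded(10, 3), is_crowded(10, 6))  # True False
# A vertical target (0 deg) flanked by two 40-deg lines at 10 deg eccentricity:
print(pooled_orientation(0, [40, 40], is_crowded(10, 3)))
```

In the crowded case the reported orientation lies between the target's and the flankers'. This is a crude analogue of features being "jumbled together".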

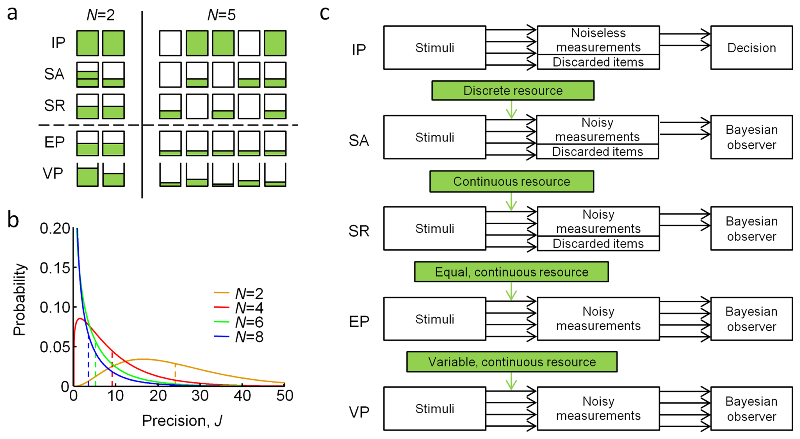

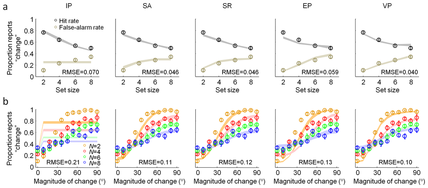

Visual working memory

Working memory is a fundamental aspect of human cognition. It allows us to remember bits of information over short periods of time and to make split-second decisions about what to do next. Our past work focused on precisely modeling the limitations of working memory. Specifically, we showed that performance in a change detection task is much better described as inference upon a noisy internal representation, in which both the value and the quality of the representation fluctuate continuously from item to item and from trial to trial.
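Inference on a noisy representation whose quality fluctuates can be sketched as a simulation. The modeling choices here are illustrative assumptions, not necessarily those of the published work. There is a single item with a value on a line, and measurement noise is Gaussian with a precision drawn from a gamma distribution independently for each display. The change is Gaussian-distributed, and change and no-change trials are equally likely. The observer knows its own precision on each trial and reports a change when the likelihood ratio favors one. The function name `simulate_change_detection` is hypothetical.

```python
import numpy as np

def simulate_change_detection(mean_precision, n_trials=20_000, tau=1.0,
                              change_sd=1.0, seed=0):
    """Variable-precision observer in a one-item change detection task.

    Returns (hit_rate, false_alarm_rate). All distributions are
    illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    change = rng.random(n_trials) < 0.5               # half the trials change
    delta = np.where(change, rng.normal(0.0, change_sd, n_trials), 0.0)
    s = rng.normal(0.0, 1.0, n_trials)                # stimulus value

    # Precision fluctuates from display to display: gamma with mean
    # mean_precision and scale tau (shape = mean / scale).
    shape = mean_precision / tau
    j1 = rng.gamma(shape, tau, n_trials)
    j2 = rng.gamma(shape, tau, n_trials)

    # Noisy memory of display 1 and noisy measurement of display 2.
    x1 = s + rng.normal(0.0, 1.0, n_trials) / np.sqrt(j1)
    x2 = s + delta + rng.normal(0.0, 1.0, n_trials) / np.sqrt(j2)
    d = x2 - x1

    # d ~ N(0, v) without a change and N(0, v + change_sd**2) with one,
    # where v = 1/j1 + 1/j2 is known to the observer on each trial.
    v = 1.0 / j1 + 1.0 / j2
    v_change = v + change_sd ** 2
    log_lr = 0.5 * np.log(v / v_change) + 0.5 * d ** 2 * (1.0 / v - 1.0 / v_change)
    says_change = log_lr > 0                          # equal priors

    return says_change[change].mean(), says_change[~change].mean()

for jbar in (1.0, 4.0, 16.0):
    hit, fa = simulate_change_detection(jbar)
    print(f"mean precision {jbar:>4}: hit rate {hit:.2f}, false alarms {fa:.2f}")
```

Because the decision threshold is recomputed from each trial's precision, the observer adapts to the quality of its own representation rather than applying one fixed criterion. That adaptation is what distinguishes inference from a simple threshold rule.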